Integrating Impala with HDP

Created: 2020-06-10

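Recommendation: my HDP version is 2.6.5. Installing Impala 2.12 failed with a method-not-found error. The missing method exists in newer Hadoop releases but not in HDP 2.6.5. After installing Impala 2.4 instead, everything ran normally.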

Configure the yum repository

[root@ecs-hdp-dev-ambari ~]# yum install nginx

[root@ecs-hdp-dev-ambari ~]# vim /etc/nginx/nginx.conf

root /var/www/html; # change the root directory

# Configure the CDH yum repository

[root@ecs-hdp-dev-ambari html]# vim /etc/yum.repos.d/cdh.repo

[cloudera-cdh5]

# Packages for Cloudera's Distribution for Hadoop, Version 5, on RedHat or CentOS 7 x86_64

name=Cloudera's Distribution for Hadoop, Version 5

baseurl=https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/5/

gpgkey =https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/RPM-GPG-KEY-cloudera

gpgcheck = 1

# reposync is slow; consider running it inside screen

[root@ecs-hdp-dev-ambari html]# reposync --repoid=cloudera-cdh5

[root@ecs-hdp-dev-ambari html]# createrepo cloudera-cdh5/

Spawning worker 0 with 31 pkgs

Spawning worker 1 with 31 pkgs

Spawning worker 2 with 31 pkgs

Spawning worker 3 with 31 pkgs

Workers Finished

Saving Primary metadata

Saving file lists metadata

Saving other metadata

Generating sqlite DBs

Sqlite DBs complete

# Add the yum repository on every machine in the HDP cluster (run on all machines)

[root@name1 html]# vim /etc/yum.repos.d/cdh.repo

[cloudera-cdh5]

# Packages for Cloudera's Distribution for Hadoop, Version 5, on RedHat or CentOS 7 x86_64

name=Cloudera's Distribution for Hadoop, Version 5

baseurl=http://172.16.96.62/cloudera-cdh5/

gpgcheck = 0

enabled=1

[root@ecs-hdp-dev-ambari html]# systemctl start nginx
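Since the client-side repo file has to be created on every cluster machine, it may be easier to generate it from a script than to edit it by hand. The following is a minimal sketch under that assumption; `write_cdh_repo` is a hypothetical helper name, and the mirror address and output path are passed as arguments rather than taken from any tool.

```shell
# Sketch: write the client-side repo definition without opening an editor.
# write_cdh_repo is a hypothetical helper, not part of yum or Ambari.
write_cdh_repo() {
    mirror_host="$1"   # e.g. 172.16.96.62, the nginx host used above
    out_file="$2"      # e.g. /etc/yum.repos.d/cdh.repo
    cat > "$out_file" <<EOF
[cloudera-cdh5]
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://${mirror_host}/cloudera-cdh5/
gpgcheck=0
enabled=1
EOF
}

# Example (as root, on each cluster machine):
#   write_cdh_repo 172.16.96.62 /etc/yum.repos.d/cdh.repo
#   yum clean all && yum makecache
```

The file only becomes useful once nginx on the mirror host is serving the synced directory.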

Configure Ambari

# Check the cluster version

[root@name1 html]# hdp-select status hadoop-client

hadoop-client - 2.6.5.0-292

# Change to the HDP stack directory in Ambari that matches this version

[root@ecs-hdp-dev-ambari html]# cd /var/lib/ambari-server/resources/stacks/HDP/2.6/services

[root@ecs-hdp-dev-ambari services]# git clone https://github.com/cas-bigdatalab/ambari-impala-service.git IMPALA

Cloning into 'IMPALA'...

remote: Enumerating objects: 283, done.

remote: Total 283 (delta 0), reused 0 (delta 0), pack-reused 283

Receiving objects: 100% (283/283), 516.21 KiB | 56.00 KiB/s, done.

Resolving deltas: 100% (142/142), done.

# Restart the Ambari server

[root@ecs-hdp-dev-ambari services]# ambari-server restart
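The services path used above follows from the version that `hdp-select` reports: 2.6.5.0-292 maps to the `HDP/2.6` stack directory. A small sketch of that mapping is shown here. `services_dir_from_status` is a hypothetical helper name, and it assumes the stack directory is always named after the major.minor part of the version.

```shell
# Sketch: derive the Ambari stack services directory from one line of
# `hdp-select status hadoop-client` output.
# services_dir_from_status is a hypothetical helper name.
services_dir_from_status() {
    status_line="$1"                       # e.g. "hadoop-client - 2.6.5.0-292"
    full_version="${status_line##* - }"    # -> 2.6.5.0-292
    major="${full_version%%.*}"            # -> 2
    rest="${full_version#*.}"              # -> 6.5.0-292
    minor="${rest%%.*}"                    # -> 6
    echo "/var/lib/ambari-server/resources/stacks/HDP/${major}.${minor}/services"
}

# Example:
#   cd "$(services_dir_from_status "$(hdp-select status hadoop-client)")"
```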

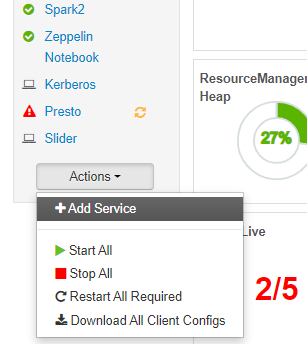

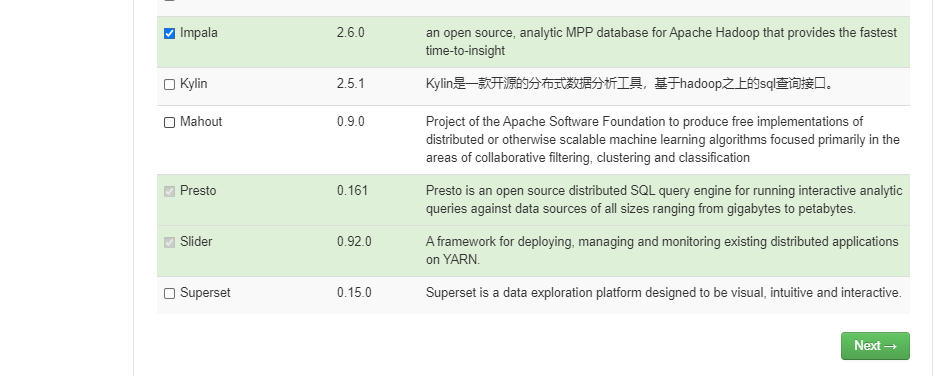

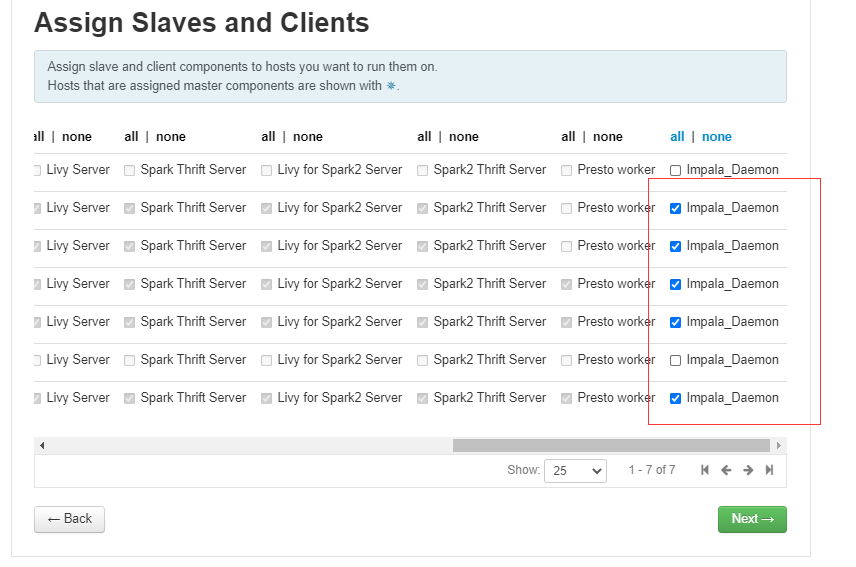

Install Impala through the Ambari console

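The Install, Start and Test step failed with various key errors; configuring passwordless SSH access resolved them. After that, just click Next through the remaining steps.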

Startup failure 1

Check the logs:

[root@name2 impala]# pwd

/var/log/impala

[root@name2 impala]# cat impala-state-store.log

Unable to find Java. JAVA_HOME should be set in /etc/default/bigtop-utils

Unable to find Java. JAVA_HOME should be set in /etc/default/bigtop-utils

```shell
[root@node3 ~]# vim /etc/default/bigtop-utils
export JAVA_HOME=/usr/jdk64/jdk1.8.0_112
```
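The same edit is likely needed on each node that logs the "Unable to find Java" message, so a repeatable version can help. This is a sketch only; `set_java_home` is a hypothetical helper name, and the JDK path is whatever is installed on your nodes.

```shell
# Sketch: set JAVA_HOME in a bigtop-utils defaults file, idempotently.
# set_java_home is a hypothetical helper name.
set_java_home() {
    conf_file="$1"    # e.g. /etc/default/bigtop-utils
    java_home="$2"    # e.g. /usr/jdk64/jdk1.8.0_112
    if grep -q '^export JAVA_HOME=' "$conf_file" 2>/dev/null; then
        # Replace the existing line. "|" is the sed delimiter because the
        # value contains slashes. Writing back with cat keeps the file's
        # original owner and permissions.
        tmp_file="$(mktemp)"
        sed "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$conf_file" > "$tmp_file" \
            && cat "$tmp_file" > "$conf_file"
        rm -f "$tmp_file"
    else
        # No export line yet: append one.
        echo "export JAVA_HOME=${java_home}" >> "$conf_file"
    fi
}

# Example (as root):
#   set_java_home /etc/default/bigtop-utils /usr/jdk64/jdk1.8.0_112
```

Running it twice leaves a single `export JAVA_HOME=` line in the file.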

Startup failure 2

Check the logs on the Impala daemon node:

[root@node2 impala]# tail -f impalad.ERROR

Log file created at: 2020/06/10 14:17:05

Running on machine: node2.hdp.work.com

Log line format: [IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg

E0610 14:17:05.607198 25912 logging.cc:121] stderr will be logged to this file.

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

E0610 14:17:12.706774 25912 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

E0610 14:17:15.709295 25912 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

E0610 14:17:36.721410 25912 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

E0610 14:17:39.721897 25912 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

E0610 14:17:39.722074 25912 impalad-main.cc:92] Impalad services did not start correctly, exiting. Error: Couldn't open transport for name2.hdp.work.com:24000 (SASL(-4): no mechanism available: No worthy mechs found)

Statestore subscriber did not start up.

[root@node2 impala]# tail -f impalad.INFO

E0610 14:14:19.483218 15485 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

I0610 14:14:19.483361 15485 thrift-client.cc:94] Unable to connect to name2.hdp.work.com:24000

I0610 14:14:19.483366 15485 thrift-client.cc:100] (Attempt 9 of 10)

E0610 14:14:22.491096 15485 authentication.cc:176] SASL message (Kerberos (internal)): No worthy mechs found

I0610 14:14:22.491261 15485 thrift-client.cc:94] Unable to connect to name2.hdp.work.com:24000

I0610 14:14:22.491269 15485 thrift-client.cc:100] (Attempt 10 of 10)

I0610 14:14:22.491289 15485 statestore-subscriber.cc:238] statestore registration unsuccessful: Couldn't open transport for name2.hdp.work.com:24000 (SASL(-4): no mechanism available: No worthy mechs found)

E0610 14:14:22.495311 15485 impalad-main.cc:92] Impalad services did not start correctly, exiting. Error: Couldn't open transport for name2.hdp.work.com:24000 (SASL(-4): no mechanism available: No worthy mechs found)

Statestore subscriber did not start up.

# Install the dependency packages, then restart the affected processes in Ambari

[root@node2 impala]# yum install cyrus-sasl-plain cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-md5
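To find which nodes are affected before installing, it can help to scan each impalad log for the SASL message and check which of the packages are absent. The helpers below are a sketch; `count_sasl_mech_errors` and `list_missing_sasl_packages` are hypothetical names, and the second one assumes an RPM-based system.

```shell
# Sketch: detect the "No worthy mechs found" symptom and the missing packages.
# Both function names are hypothetical helpers.

# Print the number of log lines that contain the SASL error message.
count_sasl_mech_errors() {
    log_file="$1"     # e.g. /var/log/impala/impalad.ERROR
    grep -c 'No worthy mechs found' "$log_file"
}

# Print each of the four packages that rpm does not report as installed.
list_missing_sasl_packages() {
    for pkg in cyrus-sasl-plain cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-md5; do
        rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}

# Example:
#   count_sasl_mech_errors /var/log/impala/impalad.ERROR
#   list_missing_sasl_packages
```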

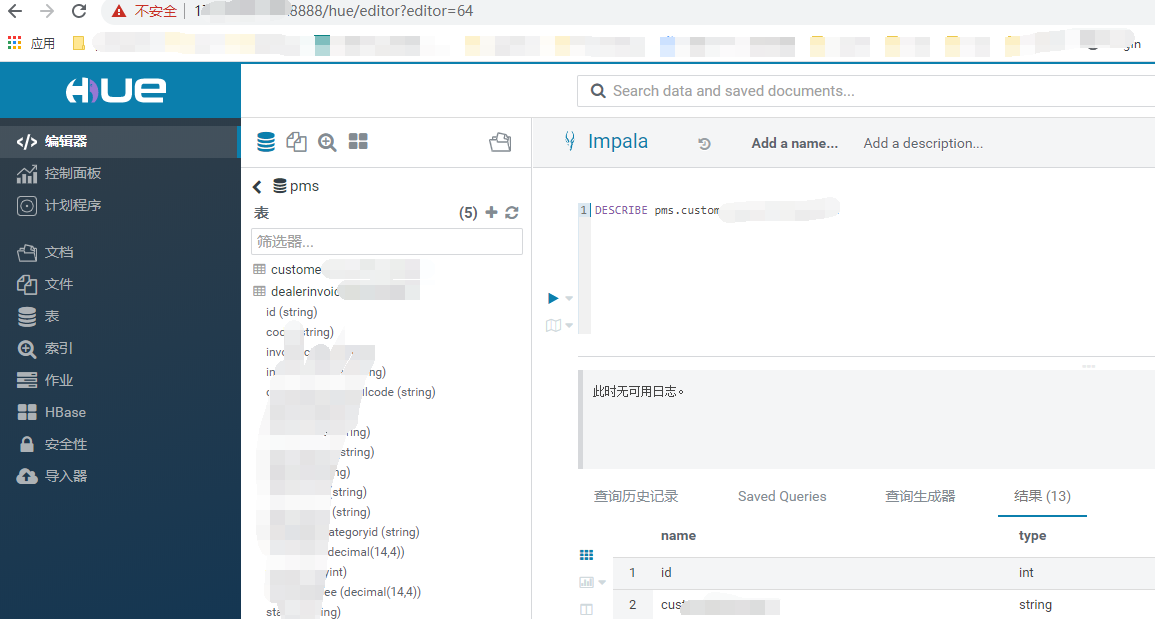

Configure Impala in Hue

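Edit the Hue configuration file. My Hue version is 4.7.1; version 3 should probably work as well (for a Docker install, enter the container and edit the file there).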

[root@name1 hue-4.7.1]# pwd

/hadoop/hue/hue-4.7.1

[root@name1 hue-4.7.1]# vim desktop/conf/hue.ini

[impala]

server_host=name2.work.com

server_port=21050

# If Kerberos is not enabled on your Hadoop cluster, the next two settings are not needed.

use_sasl=true

impala_principal=impala/_HOST@work.com

# Notes: server_host is the machine where Impala is installed; server_port is the port Impala listens on; impala_principal=impala/_HOST@xxx is a fixed format, where xxx is replaced with your KDC realm.
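The difference between the Kerberos and non-Kerberos variants of this section can be sketched as a small generator. `render_hue_impala_section` is a hypothetical helper name; it only prints text and does not touch hue.ini.

```shell
# Sketch: print the [impala] section for hue.ini.
# render_hue_impala_section is a hypothetical helper name.
# The two Kerberos settings are printed only when a realm is supplied.
render_hue_impala_section() {
    host="$1"          # machine where Impala is installed
    port="$2"          # port Impala listens on, 21050 in this post
    realm="${3:-}"     # KDC realm; leave empty when Kerberos is disabled
    echo "[impala]"
    echo "server_host=${host}"
    echo "server_port=${port}"
    if [ -n "$realm" ]; then
        echo "use_sasl=true"
        echo "impala_principal=impala/_HOST@${realm}"
    fi
}

# Example:
#   render_hue_impala_section name2.work.com 21050 work.com
```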

A quick test in Hue to check that Impala works:

Troubleshooting 3: a SELECT in Hue fails with the following error (reported with Impala 2.6):

AnalysisException: Failed to load metadata for table: 'customeraccounthisdetail' CAUSED BY: TableLoadingException: java.lang.NoClassDefFoundError: Could not initialize class com.cloudera.impala.catalog.HBaseTable CAUSED BY: ExecutionException: java.lang.NoClassDefFoundError: Could not initialize class com.cloudera.impala.catalog.HBaseTable CAUSED BY: NoClassDefFoundError: Could not initialize class com.cloudera.impala.catalog.HBaseTable

Or:

AnalysisException: java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: ExecutionException: java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration CAUSED BY: TableLoadingException: java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: ExecutionException: java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration CAUSED BY: ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration

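Fix as follows. (Note: Impala must be paired with the CDH HBase jars. Copy the matching version of the four CDH HBase jars over and create the symbolic links. The transcript below uses the HDP HBase jars, which causes an error on startup, so treat it only as a reference for the procedure and use the four CDH HBase jars instead.)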

[root@client1 ~]# cp /usr/hdp/2.6.5.0-292/hbase/lib/hbase-annotations-1.1.2.2.6.5.0-292.jar /usr/lib/impala/lib/

[root@client1 ~]# cp /usr/hdp/2.6.5.0-292/hbase/lib/hbase-client-1.1.2.2.6.5.0-292.jar /usr/lib/impala/lib/

[root@client1 ~]# cp /usr/hdp/2.6.5.0-292/hbase/lib/hbase-common-1.1.2.2.6.5.0-292.jar /usr/lib/impala/lib/

[root@client1 ~]# cp /usr/hdp/2.6.5.0-292/hbase/lib/hbase-protocol-1.1.2.2.6.5.0-292.jar /usr/lib/impala/lib/

[root@client1 ~]# cd !$

cd /usr/lib/impala/lib/

[root@client1 lib]# ll hbase-*

-rw-r--r-- 1 root root 20807 Jun 16 15:01 hbase-annotations-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 36 Jun 16 13:38 hbase-annotations.jar -> /usr/lib/hbase/hbase-annotations.jar

-rw-r--r-- 1 root root 1407871 Jun 16 15:01 hbase-client-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 31 Jun 16 13:38 hbase-client.jar -> /usr/lib/hbase/hbase-client.jar

-rw-r--r-- 1 root root 577452 Jun 16 15:01 hbase-common-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 31 Jun 16 13:38 hbase-common.jar -> /usr/lib/hbase/hbase-common.jar

-rw-r--r-- 1 root root 4988181 Jun 16 15:01 hbase-protocol-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 33 Jun 16 13:38 hbase-protocol.jar -> /usr/lib/hbase/hbase-protocol.jar

[root@client1 lib]# ln -s hbase-annotations-1.1.2.2.6.5.0-292.jar hbase-annotations.jar

ln: failed to create symbolic link ‘hbase-annotations.jar’: File exists

[root@client1 lib]# rm hbase-annotations.jar

rm: remove symbolic link ‘hbase-annotations.jar’? y

[root@client1 lib]# rm hbase-client.jar

rm: remove symbolic link ‘hbase-client.jar’? y

[root@client1 lib]# rm hbase-common.jar

rm: remove symbolic link ‘hbase-common.jar’? y

[root@client1 lib]# rm hbase-protocol.jar

rm: remove symbolic link ‘hbase-protocol.jar’? y

[root@client1 lib]# ln -s hbase-annotations-1.1.2.2.6.5.0-292.jar hbase-annotations.jar

[root@client1 lib]# ln -s hbase-client-1.1.2.2.6.5.0-292.jar hbase-client.jar

[root@client1 lib]# ln -s hbase-common-1.1.2.2.6.5.0-292.jar hbase-common.jar

[root@client1 lib]# ln -s hbase-protocol-1.1.2.2.6.5.0-292.jar hbase-protocol.jar

[root@client1 lib]# ll hbase-*

-rw-r--r-- 1 root root 20807 Jun 16 15:01 hbase-annotations-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 39 Jun 16 15:02 hbase-annotations.jar -> hbase-annotations-1.1.2.2.6.5.0-292.jar

-rw-r--r-- 1 root root 1407871 Jun 16 15:01 hbase-client-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 34 Jun 16 15:03 hbase-client.jar -> hbase-client-1.1.2.2.6.5.0-292.jar

-rw-r--r-- 1 root root 577452 Jun 16 15:01 hbase-common-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 34 Jun 16 15:03 hbase-common.jar -> hbase-common-1.1.2.2.6.5.0-292.jar

-rw-r--r-- 1 root root 4988181 Jun 16 15:01 hbase-protocol-1.1.2.2.6.5.0-292.jar

lrwxrwxrwx 1 root root 36 Jun 16 15:03 hbase-protocol.jar -> hbase-protocol-1.1.2.2.6.5.0-292.jar

[root@client1 lib]#

# Restart Impala
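The remove-and-relink steps above can be condensed into one loop. This is a sketch under the assumption that the four versioned jars have already been copied into the Impala lib directory. `relink_hbase_jars` is a hypothetical helper name, and the version suffix is a parameter so the same loop can be pointed at the CDH jars.

```shell
# Sketch: point hbase-<module>.jar at hbase-<module>-<version>.jar for the
# four HBase modules. relink_hbase_jars is a hypothetical helper name.
relink_hbase_jars() {
    lib_dir="$1"      # e.g. /usr/lib/impala/lib
    version="$2"      # version suffix of the jars already copied into lib_dir
    for module in annotations client common protocol; do
        target="hbase-${module}-${version}.jar"
        if [ ! -f "${lib_dir}/${target}" ]; then
            echo "missing ${lib_dir}/${target}" >&2
            return 1
        fi
        # -f replaces an existing link, so no separate rm is needed;
        # -n treats an existing link as a file instead of following it.
        ln -sfn "$target" "${lib_dir}/hbase-${module}.jar"
    done
}

# Example (as root), with the version suffix of the jars you copied:
#   relink_hbase_jars /usr/lib/impala/lib <cdh-hbase-version>
```

The function stops at the first missing jar, so a partial copy does not leave links pointing at files that do not exist.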

Troubleshooting 4: No usable temporary files: space could not be allocated on any tempor

Fix, option 1:

[root@hdpprdd02 ~]# ll /tmp/impala_scratch

ls: cannot access /tmp/impala_scratch: No such file or directory

[root@hdpprdd02 ~]# ll /hadoop01/tmp/impala_scratch

total 0

[root@hdpprdd02 ~]# mkdir -pv /tmp/impala_scratch

mkdir: created directory ‘/tmp/impala_scratch’

[root@hdpprdd02 ~]# chmod 777 /tmp/impala_scratch
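If several nodes report the same error, the directory check and creation above can be wrapped in one repeatable step. A minimal sketch, with `ensure_scratch_dir` as a hypothetical helper name and the same wide-open 777 mode used in the transcript:

```shell
# Sketch: create the Impala scratch directory if it is missing and open its
# permissions, as done by hand above.
# ensure_scratch_dir is a hypothetical helper name.
ensure_scratch_dir() {
    scratch_dir="$1"   # e.g. /tmp/impala_scratch
    # mkdir -p succeeds whether or not the directory already exists.
    mkdir -p "$scratch_dir" && chmod 777 "$scratch_dir"
}

# Example (as root, on each affected node):
#   ensure_scratch_dir /tmp/impala_scratch
```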

Fix, option 2:

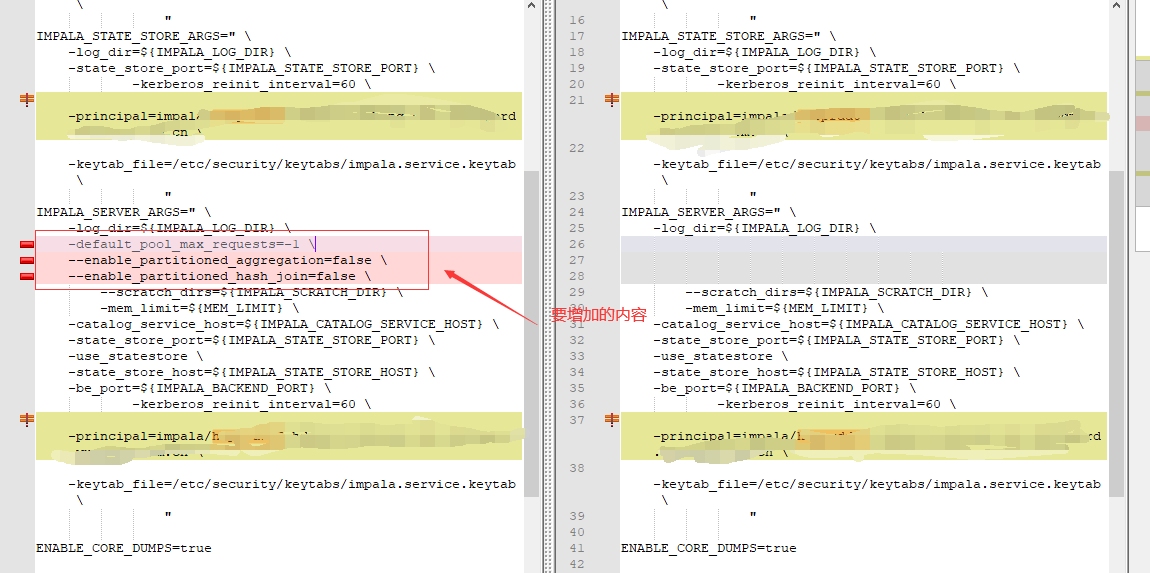

Edit Impala's configuration file /etc/default/impala (note: I tried making this change through Ambari on HDP, but it did not take effect).

Troubleshooting 5: Memory limit exceeded

Fix: adjust the relevant memory parameters.

Edit Impala's configuration file /etc/default/impala (note: I tried making this change through Ambari on HDP, but it did not take effect).
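The post does not spell out which settings were changed. As an assumption, the relevant impalad startup flags are `-scratch_dirs` for the temporary-file error and `-mem_limit` for the memory error. Before editing, it may help to see whether the file already sets either one. `show_impalad_tuning_flags` is a hypothetical helper name.

```shell
# Sketch: list the lines of an Impala defaults file that mention the
# scratch directory or memory limit flags (assumed flag names, see above).
# show_impalad_tuning_flags is a hypothetical helper name.
show_impalad_tuning_flags() {
    defaults_file="$1"   # e.g. /etc/default/impala
    # -n prefixes each match with its line number; no output means
    # neither flag is set in the file.
    grep -n -E 'scratch_dirs|mem_limit' "$defaults_file"
}

# Example:
#   show_impalad_tuning_flags /etc/default/impala
```

After changing the file, restart the Impala daemons so the new arguments are picked up.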
