k8s high-availability installation (kubeadm, keepalived, haproxy)

Overview

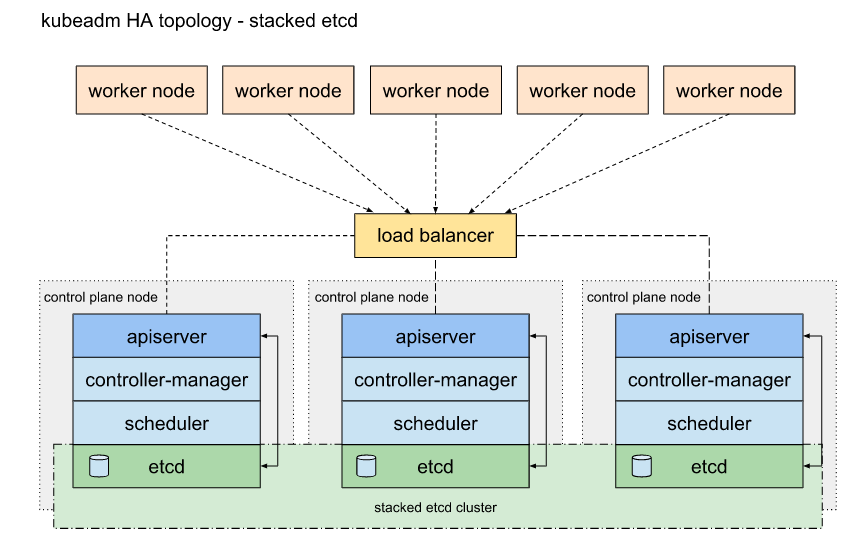

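This guide uses the stacked etcd topology. A stacked HA cluster is a topology in which the etcd distributed data store runs on the control-plane nodes managed by kubeadm, as one of the control-plane components.

Each control-plane node runs an instance of kube-apiserver, kube-scheduler and kube-controller-manager. kube-apiserver is exposed to the worker nodes through a load balancer.

Each control-plane node creates a local etcd member, and that member talks only to the kube-apiserver on the same node. The same applies to the local kube-controller-manager and kube-scheduler instances.

This topology couples the control plane and the etcd members on the same nodes. Compared with an external etcd cluster, it is simpler to set up and the replicas are easier to manage.

However, a stacked cluster carries the risk of coupled failure: if a node goes down, both its etcd member and its control-plane instance are lost, and redundancy is reduced. You can lower this risk by adding more control-plane nodes.

You should therefore run at least three stacked control-plane nodes for an HA cluster: etcd needs a majority of its members to stay available, so three is the smallest cluster that survives the loss of one node.

This is the default topology in kubeadm. When you use kubeadm init and kubeadm join --control-plane, a local etcd member is created automatically on each control-plane node.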
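The "at least three" figure follows from etcd's majority quorum. With n members, floor(n/2)+1 must be healthy, so the cluster survives floor((n-1)/2) failures. The short bash sketch below only tabulates that arithmetic; the function names are made up for illustration. It shows that two members tolerate no more failures than one, which is why an odd count of three or more is used.

```shell
#!/usr/bin/env bash
# Illustration only: etcd majority-quorum arithmetic for an n-member cluster.

# Members that must be healthy for etcd to keep serving writes: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a majority remains: floor((n - 1) / 2)
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(tolerated_failures "$n")"
done
```

For three members it prints `members=3 quorum=2 tolerated_failures=1`.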

Preparation:

| IP address | Hostname | Role |
|---|---|---|
| 192.168.31.132 | vm-192-168-31-132.mydomain.com.cn | master node |
| 192.168.31.133 | vm-192-168-31-133.mydomain.com.cn | master node |
| 192.168.31.134 | vm-192-168-31-134.mydomain.com.cn | master node |
| 192.168.31.135 | vm-192-168-31-135.mydomain.com.cn | worker node |
| 192.168.31.136 | vm-192-168-31-136.mydomain.com.cn | worker node |
| 192.168.31.131 | k8s-api-lb.mydomain.com.cn | HA virtual IP (VIP) |

- Configure the hostname and /etc/hosts

```
# Required on all five hosts; the settings for the other four are omitted here
[root@localhost ~]# hostnamectl set-hostname vm-192-168-31-132.mydomain.com.cn
[root@localhost ~]# vim /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.31.132 vm-192-168-31-132.mydomain.com.cn
192.168.31.133 vm-192-168-31-133.mydomain.com.cn
192.168.31.134 vm-192-168-31-134.mydomain.com.cn
192.168.31.135 vm-192-168-31-135.mydomain.com.cn
192.168.31.136 vm-192-168-31-136.mydomain.com.cn
192.168.31.131 k8s-api-lb.mydomain.com.cn
```
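Every hostname in this guide is the IP with its dots turned into dashes. That means the /etc/hosts lines can be generated instead of typed five times. The bash sketch below assumes the `vm-<ip>.mydomain.com.cn` convention from the table above. `ip_to_hostname` and `hosts_entries` are hypothetical helper names, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: derive hostnames from IPs using this guide's naming
# convention (vm-<ip with dots replaced by dashes>.mydomain.com.cn).
DOMAIN="mydomain.com.cn"
NODE_IPS=(192.168.31.132 192.168.31.133 192.168.31.134 192.168.31.135 192.168.31.136)
VIP="192.168.31.131"
VIP_NAME="k8s-api-lb.${DOMAIN}"

ip_to_hostname() {
  # ${1//./-} replaces every "." in the IP with "-"
  echo "vm-${1//./-}.${DOMAIN}"
}

# Print the lines to append to /etc/hosts on every machine
hosts_entries() {
  local ip
  for ip in "${NODE_IPS[@]}"; do
    echo "${ip} $(ip_to_hostname "$ip")"
  done
  echo "${VIP} ${VIP_NAME}"
}

hosts_entries
```

After sourcing the functions on each machine, `hostnamectl set-hostname "$(ip_to_hostname 192.168.31.132)"` (with that machine's own IP) and `hosts_entries >> /etc/hosts` would replace the manual edits.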

- Set up the yum repositories

```shell
wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# The second gpgkey URL is a continuation line, so it must be indented
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

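- Configure the firewall, SELinux and sysctl.conf

```shell
# Stop and disable firewalld
systemctl stop firewalld
systemctl disable firewalld

# Switch SELinux to permissive now and disable it permanently (takes effect after reboot)
setenforce 0
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# kubelet refuses to start with swap enabled by default; this lasts until the next reboot
swapoff -a

# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
sysctl -p
```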
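`swapoff -a` only lasts until the next reboot. The `net.bridge.*` keys in sysctl.conf also need the br_netfilter kernel module loaded at boot. The sketch below shows one way to make both persistent on a systemd-based CentOS host.

Assumptions:
- the file name `/etc/modules-load.d/k8s.conf` is an arbitrary choice;
- the sed expression expects swap entries in /etc/fstab to contain `swap` as a whitespace-separated field;
- both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: make the swap and br_netfilter settings survive a reboot.

# Comment out every active swap entry in the given fstab file.
# Lines that already start with "#" are left alone.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab}
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Have systemd-modules-load load br_netfilter at boot.
persist_br_netfilter() {
  local conf=${1:-/etc/modules-load.d/k8s.conf}
  echo br_netfilter > "$conf"
}
```

Usage (as root): `disable_swap_in_fstab /etc/fstab && persist_br_netfilter`.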

- Install dependency packages

```shell
yum install -y yum-utils device-mapper-persistent-data lvm2 nfs-utils
```

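Install the HA components haproxy and keepalived

Install them on 132 and 133. They could also run on two separate machines outside the cluster; here they share two of the master nodes to save resources. Because kube-apiserver already listens on 6443 on these hosts, haproxy listens on 8443, which is why controlPlaneEndpoint later uses port 8443.

```shell
# Note: each machine must use a different priority, which decides the VRRP election
# for the VIP (on 133, also set state BACKUP)
yum -y install haproxy keepalived
```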

```
[root@vm-192-168-31-132 ~]# cat /etc/keepalived/keepalived.conf
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.131
    }
    track_script {
        check_haproxy
    }
}
```

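```
[root@vm-192-168-31-132 ~]# cat /etc/keepalived/check_haproxy.sh
#!/bin/bash
# If haproxy is not running, stop keepalived so the VIP fails over to the other node
if ! systemctl is-active --quiet haproxy; then
    echo "haproxy is down, stop keepalived"
    systemctl stop keepalived
fi
[root@vm-192-168-31-132 ~]# chmod +x /etc/keepalived/check_haproxy.sh
```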

```
[root@vm-192-168-31-132 ~]# cat /etc/haproxy/haproxy.cfg
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

frontend k8s-api
    bind *:8443
    mode tcp
    default_backend apiserver

backend apiserver
    balance roundrobin
    mode tcp
    server k8s-m1 192.168.31.132:6443 check inter 2000 rise 2 fall 3 weight 1 maxconn 2000
    server k8s-m2 192.168.31.133:6443 check inter 2000 rise 2 fall 3 weight 1 maxconn 2000
    server k8s-m3 192.168.31.134:6443 check inter 2000 rise 2 fall 3 weight 1 maxconn 2000
```

```
# --now enables and starts each service; haproxy first, so the keepalived check finds it running
[root@vm-192-168-31-132 ~]# systemctl enable --now haproxy
[root@vm-192-168-31-132 ~]# systemctl enable --now keepalived
```
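Only 132's keepalived.conf is shown. On 133 the file has to differ in `state` (BACKUP) and `priority` (lower than 100). The sketch below prints the same configuration with those two values parameterised, so both nodes can be generated from one template. `render_keepalived_conf` is a hypothetical name, and the interface, router id and VIP are copied from the 132 config above.

```shell
#!/usr/bin/env bash
# Sketch: print keepalived.conf for a given VRRP state and priority.
# Values other than state/priority/interface are copied from the 132 config.
render_keepalived_conf() {
  local state=$1 priority=$2 iface=${3:-ens33}
  cat <<EOF
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.131
    }
    track_script {
        check_haproxy
    }
}
EOF
}
```

To set up 133 with it:
1. Run `render_keepalived_conf BACKUP 90 > /etc/keepalived/keepalived.conf` (90 is an arbitrary example priority).
2. Copy check_haproxy.sh and haproxy.cfg over unchanged.
3. Start haproxy, then keepalived.

To check the result, `ip addr show ens33` on 132 should list 192.168.31.131. After stopping haproxy on 132, the check script stops keepalived there, and the address should move to 133.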

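Install Docker

Required on all five machines.

```shell
yum install -y docker-ce docker-ce-cli containerd.io

# Switch Docker to the systemd cgroup driver
sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

# Registry mirror: write daemon.json before Docker starts so it takes effect.
# Do not also set exec-opts here; dockerd refuses to start if an option is given both as a flag and in daemon.json.
mkdir -p /etc/docker
cat <<EOF > /etc/docker/daemon.json
{
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"]
}
EOF

systemctl daemon-reload
systemctl enable docker
systemctl start docker
```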

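Install Kubernetes

Required on all five machines.

```shell
# Pin the versions to match kubernetesVersion in kubeadm-config.yaml (v1.18.2 here)
yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2
systemctl enable kubelet
# kubelet keeps restarting until kubeadm init/join gives it a configuration; this is expected
systemctl start kubelet
```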

Initialize the first master node

```
[root@vm-192-168-31-132 ~]# cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "k8s-api-lb.mydomain.com.cn:8443"
networking:
  serviceSubnet: "10.96.0.0/16"
  # Must be a network address and must not overlap serviceSubnet or the node network
  podSubnet: "10.100.0.0/16"
  dnsDomain: "cluster.local"
```
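podSubnet should be given as a network address (10.100.0.0/16 rather than a host address such as 10.100.0.1/16). It must also not overlap serviceSubnet or the node network 192.168.31.0/24. The pure-bash sketch below can check this before kubeadm init. The function names are made up for this example, and it handles IPv4 only.

```shell
#!/usr/bin/env bash
# Sketch: normalise an IPv4 CIDR and test two CIDRs for overlap.
# Octets must not have leading zeros (bash would read "08" as octal).

ip_to_int() {
  local -a o
  IFS=. read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Netmask for a prefix length as an integer, e.g. 16 -> 0xFFFF0000
prefix_mask() {
  local len=$1
  echo $(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
}

# Normalise to the network address: 10.100.0.1/16 -> 10.100.0.0/16
cidr_network() {
  local ip=${1%/*} len=${1#*/}
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & $(prefix_mask "$len") )))/${len}"
}

# Exit status 0 if the two CIDRs share at least one address.
# CIDR blocks are either nested or disjoint, so they overlap exactly when
# they agree on the shorter of the two prefixes.
cidrs_overlap() {
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/}
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask
  mask=$(prefix_mask "$len")
  (( ($(ip_to_int "$a_ip") & mask) == ($(ip_to_int "$b_ip") & mask) ))
}
```

For example, `cidr_network 10.100.0.1/16` prints `10.100.0.0/16`. `cidrs_overlap 10.100.0.0/16 192.168.31.0/24` fails, meaning there is no overlap. `cidrs_overlap 192.168.0.0/16 192.168.31.0/24` succeeds, which is why a 192.168.0.0/16 pod network would collide with these hosts.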

```
[root@vm-192-168-31-132 ~]# kubeadm init --config=kubeadm-config.yaml --upload-certs
# ...part of the output omitted... The output contains the commands for joining master and worker nodes; they can also be regenerated later
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join k8s-api-lb.mydomain.com.cn:8443 --token 299r5l.80twk4nurs432c6j \
    --discovery-token-ca-cert-hash sha256:459dfe0d089613c72e46251c0e3d3c0dd01aee9c96931b33dfce74202887c1c4 \
    --control-plane --certificate-key 005e769210ac5b9a315386188c2d4699fd3b8371cbf6a7cd7dae3a321573143f

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-api-lb.mydomain.com.cn:8443 --token 299r5l.80twk4nurs432c6j \
    --discovery-token-ca-cert-hash sha256:459dfe0d089613c72e46251c0e3d3c0dd01aee9c96931b33dfce74202887c1c4
```
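The join token printed above has a limited lifetime (24 hours by default). The uploaded certificates are deleted after two hours, as the output says. Each part can be regenerated separately:
- `kubeadm token create --print-join-command` prints a fresh worker join command.
- `kubeadm init phase upload-certs --upload-certs` prints a new key for `--control-plane --certificate-key`.
- The `--discovery-token-ca-cert-hash` value can be recomputed from the cluster CA with the openssl pipeline given in the kubeadm documentation.

The pipeline is wrapped below in a hypothetical `ca_cert_hash` function.

```shell
#!/usr/bin/env bash
# Sketch: recompute the sha256 value for --discovery-token-ca-cert-hash.
# Extracts the CA public key, converts it to DER, hashes it, and keeps only the hex digest.
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the first master, `echo "sha256:$(ca_cert_hash)"` should reproduce the value shown in the join commands above.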

```
# Set up the kubeconfig used by kubectl
[root@vm-192-168-31-132 ~]# mkdir /root/.kube/
[root@vm-192-168-31-132 ~]# cp -i /etc/kubernetes/admin.conf /root/.kube/config
[root@vm-192-168-31-132 ~]# watch kubectl get pods -o wide -n kube-system
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-546565776c-4st6r 0/1 Pending 0 99s <none> <none> <none> <none>
coredns-546565776c-67qh5 0/1 Pending 0 99s <none> <none> <none> <none>
etcd-vm-192-168-31-132.mydomain.com.cn 1/1 Running 0 111s 192.168.31.132 vm-192-168-31-132.mydomain.com.cn <none> <none>
kube-apiserver-vm-192-168-31-132.mydomain.com.cn 1/1 Running 0 111s 192.168.31.132 vm-192-168-31-132.mydomain.com.cn <none> <none>
kube-controller-manager-vm-192-168-31-132.mydomain.com.cn 1/1 Running 0 111s 192.168.31.132 vm-192-168-31-132.mydomain.com.cn <none> <none>
kube-proxy-tqh66 1/1 Running 0 98s 192.168.31.132 vm-192-168-31-132.mydomain.com.cn <none> <none>
kube-scheduler-vm-192-168-31-132.mydomain.com.cn 1/1 Running 0 111s 192.168.31.132 vm-192-168-31-132.mydomain.com.cn <none> <none>
# Wait until the control-plane pods are Running; coredns stays Pending until a pod network plugin is installed
```

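kubeadm init takes a few minutes to finish. When I first set this up I installed 1.17.4; the configuration and output here are for v1.18.2. Set kubernetesVersion to the kubeadm and kubelet version you actually install.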

Install the network plugin

Official network plugin (Calico) documentation: https://docs.projectcalico.org/v3.13/getting-started/kubernetes/self-managed-onprem/onpremises

```
[root@vm-192-168-31-132 ~]# wget https://docs.projectcalico.org/v3.13/manifests/calico.yaml
```
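The example pool in Calico's manifest is 192.168.0.0/16, which overlaps this guide's node network 192.168.31.0/24. Calico's kubeadm instructions indicate that the pool may be picked up from the kubeadm pod CIDR automatically.

If you prefer to set it explicitly to podSubnet, do it before `kubectl apply`. The manifest itself notes that changing the pool after installation has no effect. The sketch below assumes your copy of calico.yaml contains the commented-out block exactly as quoted in the code comment; check with `grep -n CALICO_IPV4POOL_CIDR calico.yaml` first. `set_calico_pool_cidr` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: uncomment CALICO_IPV4POOL_CIDR and set it to the given CIDR.
# Assumes the manifest contains (with some leading indentation):
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# Removing the "# " prefix keeps "value:" two columns right of "- name:".
set_calico_pool_cidr() {
  local manifest=$1 cidr=$2
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.100.0.0/16 && grep -A1 CALICO_IPV4POOL_CIDR calico.yaml`.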

```
[root@vm-192-168-31-132 ~]# kubectl apply -f calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
```

Join the other master nodes to the cluster

Run on 133 and 134:

```
[root@vm-192-168-31-133 ~]# kubeadm join k8s-api-lb.mydomain.com.cn:8443 --token 299r5l.80twk4nurs432c6j \
    --discovery-token-ca-cert-hash sha256:459dfe0d089613c72e46251c0e3d3c0dd01aee9c96931b33dfce74202887c1c4 \
    --control-plane --certificate-key 005e769210ac5b9a315386188c2d4699fd3b8371cbf6a7cd7dae3a321573143f
[root@vm-192-168-31-132 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
vm-192-168-31-132.mydomain.com.cn Ready master 13m v1.18.2
vm-192-168-31-135.mydomain.com.cn NotReady <none> 38s v1.18.2
vm-192-168-31-136.mydomain.com.cn NotReady <none> 48s v1.18.2
```

In this capture, 135 and 136 had been joined shortly before the other masters (see the AGE column). That is why they appear here while 133 and 134 do not yet. The complete node list is shown in the next step.

Join the worker nodes to the cluster

Run on 135 and 136:

```
[root@vm-192-168-31-135 ~]# kubeadm join k8s-api-lb.mydomain.com.cn:8443 --token 299r5l.80twk4nurs432c6j \
    --discovery-token-ca-cert-hash sha256:459dfe0d089613c72e46251c0e3d3c0dd01aee9c96931b33dfce74202887c1c4
[root@vm-192-168-31-132 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
vm-192-168-31-132.mydomain.com.cn Ready master 16m v1.18.2
vm-192-168-31-133.mydomain.com.cn Ready master 2m34s v1.18.2
vm-192-168-31-134.mydomain.com.cn NotReady master 41s v1.18.2
vm-192-168-31-135.mydomain.com.cn Ready <none> 3m25s v1.18.2
vm-192-168-31-136.mydomain.com.cn Ready <none> 3m35s v1.18.2
```
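In the listing above, 134 is still NotReady; freshly joined nodes usually turn Ready once their calico-node pod is running. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until every node is Ready.

To script the check on the plain-text output instead, a small awk filter is enough. `not_ready_nodes` is a hypothetical name. The sketch treats any STATUS other than exactly `Ready` as not ready, including `Ready,SchedulingDisabled`.

```shell
#!/usr/bin/env bash
# Sketch: print the names of nodes whose STATUS column is not exactly "Ready".
# Reads the default text output of `kubectl get nodes` on stdin (header skipped).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes` prints nothing once the whole cluster is Ready.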

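Install nginx-ingress

- nginx-ingress website: https://www.nginx.com/products/nginx/kubernetes-ingress-controller/
- nginx-ingress GitHub: https://github.com/nginxinc/kubernetes-ingress
- Kubernetes Ingress docs: https://kubernetes.io/zh/docs/concepts/services-networking/ingress/

Note that the two nginx links above describe NGINX Inc.'s controller. The manifest below (image `nginx-ingress-controller:0.26.1`, entrypoint `/nginx-ingress-controller`) comes from the community kubernetes/ingress-nginx project (https://github.com/kubernetes/ingress-nginx). They are separate projects with different configuration.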

```
[root@k8s-n1 ingress-nginx]# cat ingress-nginx-controller.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
# rbac.authorization.k8s.io/v1 (GA) replaces the deprecated v1beta1; the resources are unchanged
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      # Added: tolerate every taint (so it can run on a master) and pin the pod to one node
      tolerations:
        - operator: "Exists"
      nodeSelector:
        kubernetes.io/hostname: vm-192-168-31-132.mydomain.com.cn
      containers:
        - name: nginx-ingress-controller
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1
          imagePullPolicy: IfNotPresent
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              hostPort: 80
              containerPort: 80
            - name: https
              hostPort: 443
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
```

Note: change `kubernetes.io/hostname: vm-192-168-31-132.mydomain.com.cn` to the name of your own node, as listed by `kubectl get nodes`.
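The controller uses hostPort 80/443 and is pinned by nodeSelector, so it only answers on the IP of the selected node. If you would rather script the hostname change than edit the file by hand, a sed one-liner is enough. `set_ingress_node` is a hypothetical helper, and it rewrites every `kubernetes.io/hostname:` line in the file while keeping the indentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the controller's nodeSelector at another node.
# \1 keeps the original indentation and key; only the value is replaced.
set_ingress_node() {
  local manifest=$1 node=$2
  sed -i -E "s|^([[:space:]]*kubernetes\.io/hostname:).*|\1 ${node}|" "$manifest"
}
```

Usage:
1. Run `set_ingress_node ingress-nginx-controller.yaml vm-192-168-31-135.mydomain.com.cn`, using a node name taken from `kubectl get nodes`.
2. Re-run `kubectl apply -f ingress-nginx-controller.yaml`.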

```
[root@vm-192-168-31-132 ~]# kubectl apply -f ingress-nginx-controller.yaml
namespace/ingress-nginx created
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-role created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.apps/nginx-ingress-controller created
[root@vm-192-168-31-132 ~]# kubectl get pods -n ingress-nginx
NAME READY STATUS RESTARTS AGE
nginx-ingress-controller-7b7dff4898-xk89l 1/1 Running 0 5m53s
```

```
[root@vm-192-168-31-132 ~]# cat nginx-test.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      app: my-nginx
  template:
    metadata:
      labels:
        app: my-nginx
    spec:
      containers:
        - name: my-nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    app: my-nginx
spec:
  ports:
    - port: 80
      protocol: TCP
      name: http
  selector:
    app: my-nginx
---
# extensions/v1beta1 Ingress is still served by v1.18 but was removed in v1.22;
# newer clusters need networking.k8s.io/v1, which uses a different backend syntax
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: my-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: nginx.test.com
      http:
        paths:
          - path: /
            backend:
              serviceName: my-nginx
              servicePort: http
```

```
[root@vm-192-168-31-132 ~]# kubectl apply -f nginx-test.yaml
deployment.apps/my-nginx created
service/my-nginx created
ingress.extensions/my-nginx created
[root@vm-192-168-31-132 ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
my-nginx-6769b99754-jph8z 1/1 Running 0 14m
```

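Add `192.168.31.132 nginx.test.com` to the hosts file on your local machine, then open http://nginx.test.com in a browser. 192.168.31.132 is the node the ingress controller is pinned to, and the name must match the `host` field of the Ingress.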
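To test without touching the hosts file, `curl -H 'Host: nginx.test.com' http://192.168.31.132/` sends the Host header that the Ingress rule matches on. If you do edit a Linux or macOS hosts file, the sketch below adds the entry only once. `add_hosts_entry` is a hypothetical helper, and appending to /etc/hosts needs root.

```shell
#!/usr/bin/env bash
# Sketch: append "IP NAME" to a hosts file unless NAME is already mapped.
add_hosts_entry() {
  local file=$1 ip=$2 name=$3
  # Fields 2..NF of each non-comment line are hostnames; awk exits 0 if NAME is found
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  echo "${ip} ${name}" >> "$file"
}
```

Usage: `sudo bash -c "$(declare -f add_hosts_entry); add_hosts_entry /etc/hosts 192.168.31.132 nginx.test.com"`.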