Big Data Cluster Issues: Troubleshooting Notes

HBase

Symptom: the RegionServer role fails to start, and the RegionServer log shows:

[regionserver/node4.work.com/192.168.31.135:16020] regionserver.HRegionServer: STOPPED: Unhandled: org.apache.hadoop.hbase.ClockOutOfSyncException: Server node4.work.com,16020,1591268474336 has been rejected; Reported time is too far out of sync with master. Time difference of 75886680ms > max allowed of 30000ms

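Solution: the hosts' clocks are out of sync, which happens often in personal virtual-machine setups. Synchronize them with ntpdate (or run your own time service): /usr/sbin/ntpdate cn.pool.ntp.org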
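HBase compares each RegionServer's reported time with the master's and rejects the server when the difference exceeds hbase.master.maxclockskew (30000 ms by default, which matches the log). As a quick sanity check, the hypothetical helpers below (skew_from_log and skew_summary are illustrative names, not HBase tools) pull the numbers out of the exception line so the size of the drift is obvious. The 75886680 ms in the log is roughly 21 hours, which is a clock problem to fix, not a limit to raise.

```sh
#!/bin/sh
# Hypothetical helpers (not part of HBase): read a ClockOutOfSyncException line on stdin.

# Print "<difference_ms> <max_allowed_ms>" from the exception text.
skew_from_log() {
  sed -n 's/.*Time difference of \([0-9][0-9]*\)ms > max allowed of \([0-9][0-9]*\)ms.*/\1 \2/p'
}

# Turn "<difference_ms> <max_allowed_ms>" into hours (one decimal) and seconds.
skew_summary() {
  awk '{ printf "drift=%.1fh limit=%ds\n", $1 / 3600000, $2 / 1000 }'
}

# Usage (the log path is a placeholder):
#   grep ClockOutOfSyncException <regionserver log> | skew_from_log | skew_summary
```

For the log line above this prints drift=21.1h limit=30s. To see a host's offset without changing its clock, ntpdate -q cn.pool.ntp.org only queries the server.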

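Viewing YARN container logs

On Linux, the local disk directories that hold container logs are set by yarn.nodemanager.log-dirs.

yarn.log-aggregation-enable turns on log aggregation. When it is enabled, the logs are uploaded to HDFS after the application finishes, under hdfs:///app-logs/user_name/logs-ifile/application_id_number (the /app-logs root normally comes from yarn.nodemanager.remote-app-log-dir).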
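Once aggregation has finished, yarn logs -applicationId <application_id> prints every container's logs without locating the files by hand; while the application is still running, the files sit on the NodeManager hosts, one directory per application under each log dir. The two functions below are hypothetical helpers that only build those paths; the values in the usage line (root dir, user, suffix, application id) are example assumptions, not values from this cluster.

```sh
#!/bin/sh
# Hypothetical path helpers; they only print paths and do not contact YARN or HDFS.

# Local per-application log directories on a NodeManager.
# $1 = value of yarn.nodemanager.log-dirs (comma-separated), $2 = application id
local_app_log_dirs() {
  printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r d; do
    if [ -n "$d" ]; then
      printf '%s/%s\n' "${d%/}" "$2"   # strip a trailing slash before joining
    fi
  done
}

# Aggregated log directory in HDFS.
# $1 = remote log root, $2 = user, $3 = suffix (e.g. logs-ifile), $4 = application id
remote_app_log_dir() {
  printf '%s/%s/%s/%s\n' "${1%/}" "$2" "$3" "$4"
}

# Usage (example values, application id made up):
#   hdfs dfs -ls "$(remote_app_log_dir /app-logs hive logs-ifile application_1617000000000_0001)"
```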

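Syncing Hive metadata to Impala

invalidate metadata [table]: the table structure changed (also needed after a table is created in Hive)

refresh [table]: the table's data changed

refresh [table] partition [partition]: the data in one partition of the table changed

invalidate metadata is much heavier than refresh: it marks the cached metadata as stale so that it is reloaded in full on the next access (for every table if no table is given), whereas refresh reloads the table's metadata and only incrementally reloads its file and block information.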
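A minimal sketch of picking the statement from the kind of change, assuming the change was made through Hive and the statement is then sent with impala-shell. impala_sync_stmt is a hypothetical helper name, and the host and table in the usage line are examples.

```sh
#!/bin/sh
# Hypothetical helper: print the Impala statement for a change made through Hive.
# $1 = schema | data | partition, $2 = db.table, $3 = partition spec (partition only)
impala_sync_stmt() {
  case "$1" in
    schema)    printf 'INVALIDATE METADATA %s\n' "$2" ;;
    data)      printf 'REFRESH %s\n' "$2" ;;
    partition) printf 'REFRESH %s PARTITION (%s)\n' "$2" "$3" ;;
    *)         echo "unknown change type: $1" >&2; return 1 ;;
  esac
}

# Usage (host and table are examples):
#   impala-shell -i impalad-host:21000 -q "$(impala_sync_stmt data dm.example_table)"
```

Preferring the narrowest statement that covers the change keeps catalog load low; a bare invalidate metadata without a table name reloads everything.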

Impala fails to load metadata

AnalysisException: Failed to load metadata for table: 'dm.dmr_sal' CAUSED BY: TableLoadingException: Unrecognized table type for table: dm.dmr_sal

Cause: Impala cannot read ORC tables. For the file formats Impala supports, see http://impala.apache.org/docs/build/impala-2.8.pdf
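To confirm that a table is ORC before querying it from Impala, check the InputFormat in Hive's DESCRIBE FORMATTED output. is_orc_table is a hypothetical helper, and HIVE_JDBC_URL in the usage line is an assumed environment variable holding your HiveServer2 JDBC URL.

```sh
#!/bin/sh
# Hypothetical helper: reads `DESCRIBE FORMATTED <table>` output on stdin and
# exits 0 if the table's InputFormat is Hive's ORC input format.
is_orc_table() {
  grep -q 'org\.apache\.hadoop\.hive\.ql\.io\.orc\.OrcInputFormat'
}

# Usage (HIVE_JDBC_URL is an assumed variable):
#   beeline -u "$HIVE_JDBC_URL" -e "DESCRIBE FORMATTED dm.dmr_sal" | is_orc_table \
#     && echo "dm.dmr_sal is ORC; Impala cannot read it"
```

One common workaround, assuming the table is not partitioned, is to keep a Parquet copy for Impala, created in Hive with CREATE TABLE dm.dmr_sal_parquet STORED AS PARQUET AS SELECT * FROM dm.dmr_sal; (dm.dmr_sal_parquet is a hypothetical name), followed by invalidate metadata dm.dmr_sal_parquet in Impala.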

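Hue hangs

Set hive.server2.parallel.ops.in.session=true in Hive. Without it, Hue hangs when several people use the same Hue account at once: HiveServer2 then runs the operations of a session one at a time, and Hue users sharing an account share HiveServer2 sessions, so one long query blocks the others.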
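After changing the setting (for example under Custom hive-site in Ambari) and restarting HiveServer2, the value a session actually sees can be checked with beeline's set command. param_is_true is a hypothetical helper and HIVE_JDBC_URL an assumed variable; the sketch accepts both beeline's table output and plain key=value lines.

```sh
#!/bin/sh
# Hypothetical helper: reads the output of `set <key>;` (beeline table or plain
# "key=value" format) on stdin and exits 0 if the key is set to true.
# $1 = property name
param_is_true() {
  grep -q "^[[:space:]|]*$1=true"
}

# Usage (HIVE_JDBC_URL is an assumed variable):
#   beeline -u "$HIVE_JDBC_URL" -e "set hive.server2.parallel.ops.in.session;" \
#     | param_is_true hive.server2.parallel.ops.in.session && echo enabled
```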

HiveServer2

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive/hdpprdm02.example.com.cn@example.com.cn from IP 172.11.121.232

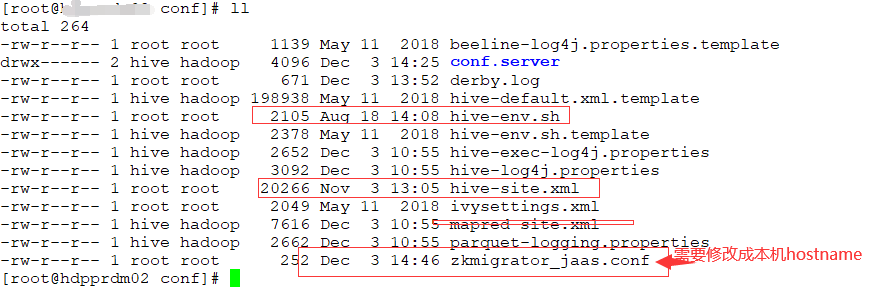

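Context: Ambari 2.6.5 with HDP 2.6.5, HiveServer2 already configured for high availability. One HiveServer2 host had too little memory, so a new HiveServer2 node was added. After starting, the new node could not handle connection requests and logged the error above. Fix: copy the missing configuration files from a working HiveServer2 node to this host and adjust them slightly.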
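For background, this AuthorizationException comes from Hadoop's proxy-user check: the remote Hadoop service only lets the hive principal act on behalf of end users when the caller's host is listed in its hadoop.proxyuser.hive.hosts (core-site.xml), so a newly added HiveServer2 whose IP is missing from that list is rejected. proxy_host_allowed is a hypothetical helper that checks a hosts value against an IP; as a sketch it only understands * and literal IP entries, not the hostnames and CIDR ranges Hadoop also accepts.

```sh
#!/bin/sh
# Hypothetical helper: does a hadoop.proxyuser.<user>.hosts value allow an IP?
# $1 = comma-separated hosts value, $2 = client IP.
# Sketch only: handles "*" and literal IPs, not hostnames or CIDR ranges.
proxy_host_allowed() {
  case ",$(printf '%s' "$1" | tr -d ' ')," in
    *,\*,*) return 0 ;;      # wildcard entry allows every host
    *",$2,"*) return 0 ;;    # exact IP entry
  esac
  return 1
}

# Usage (reads the value from the local client configuration):
#   proxy_host_allowed "$(hdfs getconf -confKey hadoop.proxyuser.hive.hosts)" 172.11.121.232 \
#     || echo "172.11.121.232 is not an allowed proxy host for hive"
```

After changing the value, hdfs dfsadmin -refreshSuperUserGroupsConfiguration and yarn rmadmin -refreshSuperUserGroupsConfiguration reload it without restarting the NameNode or ResourceManager.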

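HBase RegionServer restarts

In the Ambari console, the HBase port on one host was frequently unreachable. The HBase log showed zookeeper.ClientCnxn: Session 0x0 for server xxxx.com.cn/xxx.xxx.xxx.231:2181, unexpected error, closing socket connection and attempting reconnect, and the ZooKeeper log showed: Too many connections from /xxx.xxx.xxx.37 - max is 60

Fix: ZooKeeper limits the number of concurrent connections a single client IP can open to one server to maxClientCnxns (60 here). In Ambari, define maxClientCnxns=240 under ZooKeeper's Custom zoo.cfg, then restart ZooKeeper.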
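Before raising the limit, it helps to see which clients hold the connections, since a client that leaks connections will simply hit 240 later. zk_conns_per_ip is a hypothetical helper that reads netstat -ant output on a ZooKeeper server and counts ESTABLISHED connections to the client port per remote IP, marking clients at or above the limit (ZooKeeper refuses a new connection once an IP already holds maxClientCnxns).

```sh
#!/bin/sh
# Hypothetical helper: reads `netstat -ant` output on stdin (run on a ZooKeeper
# server) and prints "<client ip> <connections>" for ESTABLISHED connections to
# the client port, busiest first, appending OVER when a client is at the limit.
# $1 = client port (2181 here), $2 = current maxClientCnxns
zk_conns_per_ip() {
  awk -v port="$1" -v max="$2" '
    $6 == "ESTABLISHED" && $4 ~ (":" port "$") {
      ip = $5; sub(/:[0-9]+$/, "", ip); n[ip]++   # drop the client port
    }
    END {
      for (ip in n) printf "%s %d%s\n", ip, n[ip], (n[ip] >= max + 0 ? " OVER" : "")
    }' | sort -k2,2nr
}

# Usage:
#   netstat -ant | zk_conns_per_ip 2181 60
```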

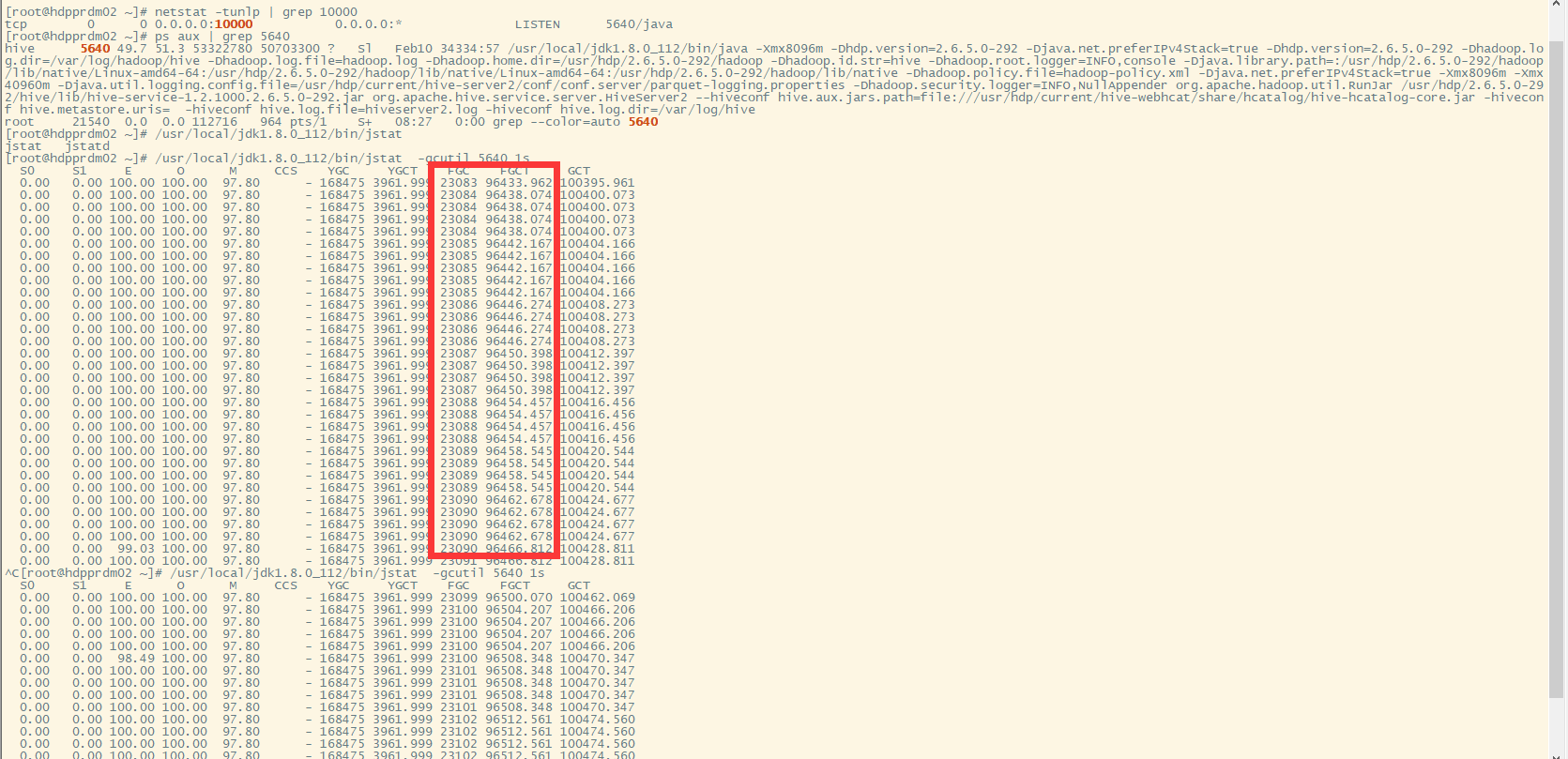

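HiveServer2 hangs on full GC

Symptom: scheduled jobs time out when connecting to HiveServer2, and beeline cannot connect either. The process's GC looked like this:

Log: 2021-03-30 08:32:33,646 WARN [org.apache.hadoop.hive.common.JvmPauseMonitor$Monitor@4c6bba7d]: common.JvmPauseMonitor (JvmPauseMonitor.java:run(188)) - Detected pause in JVM or host machine (eg GC): pause of approximately 20311ms

Solution: increase -Xmx and set -Xms to the same size, connect to HiveServer2 through a load balancer so that sessions are spread over several instances, and similar measures.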
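JvmPauseMonitor writes a WARN line for every long pause, so scanning the HiveServer2 log shows how often the process stalls and for how long. long_gc_pauses is a hypothetical helper that lists pauses at or above a threshold in milliseconds; the log path in the usage line is an assumption. For a live view, jstat -gcutil <pid> 1000 prints heap-region utilization and the full-GC count (FGC column) every second.

```sh
#!/bin/sh
# Hypothetical helper: reads a HiveServer2 log on stdin and prints
# "<date> <time> <pause_ms>" for JvmPauseMonitor pauses of at least $1 ms.
long_gc_pauses() {
  sed -n 's/^\([0-9-]* [0-9:,]*\).*Detected pause in JVM or host machine.*pause of approximately \([0-9][0-9]*\)ms.*/\1 \2/p' |
    awk -v min="$1" '$3 + 0 >= min + 0'
}

# Usage (log path assumed):
#   long_gc_pauses 10000 < /var/log/hive/hiveserver2.log
```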

HiveServer2 process crashes

Error message:

```
Caused by: java.io.IOException: Failed on local exception: java.io.IOException: Couldn't set up IO streams; Host Details : local host is: "aag.com.cn/172.xx.xxx.xxx1"; destination host is: "hdpprx.xxx.xxx.com.cn":39049;
	at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:785)
	at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1558)
	at org.apache.hadoop.ipc.Client.call(Client.java:1498)
	at org.apache.hadoop.ipc.Client.call(Client.java:1398)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)
	... 25 more
Caused by: java.io.IOException: Couldn't set up IO streams
	at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:826)
	at org.apache.hadoop.ipc.Client$Connection.access$3200(Client.java:397)
	at org.apache.hadoop.ipc.Client.getConnection(Client.java:1620)
	at org.apache.hadoop.ipc.Client.call(Client.java:1451)
	... 27 more
Caused by: java.lang.OutOfMemoryError: unable to create new native thread
	at java.lang.Thread.start0(Native Method)
	at java.lang.Thread.start(Thread.java:714)
	at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:819)
	... 30 more
```

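Fix: "unable to create new native thread" means the JVM could not start another OS thread; here the per-user process limit (nproc, which also counts threads) was the bottleneck. Raise nproc to 100000: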

```
[root@ hive]# vim /etc/security/limits.conf
* soft nofile 655350
* hard nofile 655350
* soft nproc 100000
* hard nproc 100000
#hive soft as 47185920
```
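Two caveats worth checking after the edit: a running process keeps the limits it started with, so HiveServer2 has to be restarted, and files under /etc/security/limits.d/ are read after limits.conf and can override it (on Ambari-managed hosts the hive user may have its own file there). The hypothetical helpers below read the limit and thread count of the running process from /proc to confirm the new value took effect; the pgrep pattern in the usage lines is an assumption about how the process appears.

```sh
#!/bin/sh
# Hypothetical helpers for checking limits of a running process via /proc.

# Soft "Max processes" (nproc) limit from /proc/<pid>/limits on stdin.
nproc_soft_limit() {
  awk '/^Max processes/ { print $3 }'
}

# Thread count from /proc/<pid>/status on stdin.
threads_of() {
  awk '/^Threads:/ { print $2 }'
}

# Usage:
#   pid=$(pgrep -f HiveServer2 | head -n 1)
#   nproc_soft_limit < "/proc/$pid/limits"   # expected 100000 after a restart
#   threads_of < "/proc/$pid/status"
#   ps -L -u hive --no-headers | wc -l       # all threads of the hive user
```

Because nproc is counted per user, the last line (all threads owned by hive) is the number to compare against the limit, not just the HiveServer2 thread count.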

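Cleaning up HDFS disk space

In the test environment, disk usage above 90% reduced the resources available to YARN and some NodeManagers became unusable: by default a NodeManager marks a local disk as bad once its usage exceeds yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage (90%). Fix: clean up the disks.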
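A quick way to see which local disks on a host are past that threshold is to filter df -P output. disks_over is a hypothetical helper; 90 mirrors YARN's default above, and hdfs dfs -du -h / then shows which HDFS directories take the space.

```sh
#!/bin/sh
# Hypothetical helper: reads `df -P` output on stdin and prints "<mount> <use%>"
# for filesystems whose usage is above $1 percent.
disks_over() {
  awk -v max="$1" 'NR > 1 {
    use = $5; sub(/%$/, "", use)           # "92%" -> "92"
    if (use + 0 > max + 0) print $6, use "%"
  }'
}

# Usage:
#   df -P | disks_over 90
#   hdfs dfs -du -h /
```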

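```
### Delete Spark history logs. The space is not freed immediately: the NameNode drops the files at once, but the DataNodes delete the blocks asynchronously afterwards.
hadoop fs -rm -r -skipTrash '/spark2-history/application*'

### Set the replication factor of the Hive directory to 2 (acceptable in a test environment). Do not use this in production.
hadoop fs -setrep -w 2 /apps/hive
```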
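Lowering replication from 3 to 2 frees one copy of every block, i.e. a third of the raw space the directory used, assuming it was at the default of 3. setrep on a directory changes the files currently under it; files written later still get dfs.replication. setrep_savings is a hypothetical helper for that arithmetic, taking the logical size in bytes (the first column of hdfs dfs -du -s).

```sh
#!/bin/sh
# Hypothetical helper: raw HDFS bytes freed when data of logical size $1 (bytes)
# goes from $2 replicas to $3 replicas; every block loses ($2 - $3) copies.
setrep_savings() {
  echo $(( $1 * ($2 - $3) ))
}

# Usage:
#   size=$(hdfs dfs -du -s /apps/hive | awk '{ print $1 }')
#   setrep_savings "$size" 3 2
```

To keep /spark2-history from filling up again, the Spark history server can delete old logs itself with spark.history.fs.cleaner.enabled=true and spark.history.fs.cleaner.maxAge.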